Introduction to VMware vSphere Bitfusion

VMware acquired Bitfusion in August 2019. Bitfusion was a pioneer in virtualizing hardware accelerators, with a focus on GPU technology.

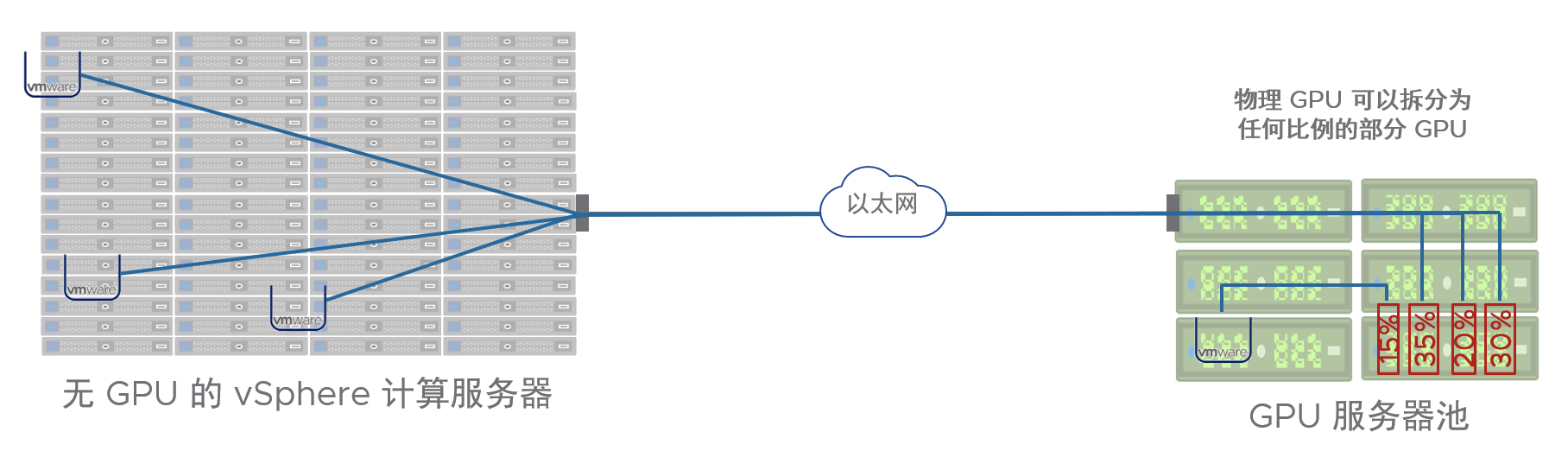

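Bitfusion provides a software platform that decouples specific physical resources from the servers they are attached to, so that multiple clients can share GPU compute capacity over the network.

Until this acquisition VMware had no GPU virtualization technology of its own; Bitfusion fills that gap.

The Bitfusion client runs the AI/ML application, which uses the passthrough GPUs on the Bitfusion server over the network.

Bitfusion can partition GPU memory into slices of arbitrary size and assign them to different clients for simultaneous use.

Bitfusion can provide GPU compute remotely to both VMs and containers.

The Bitfusion GPU resource pool is somewhat similar to a storage area network (SAN), which is why some people call it a GPU Attached Network.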

Installation

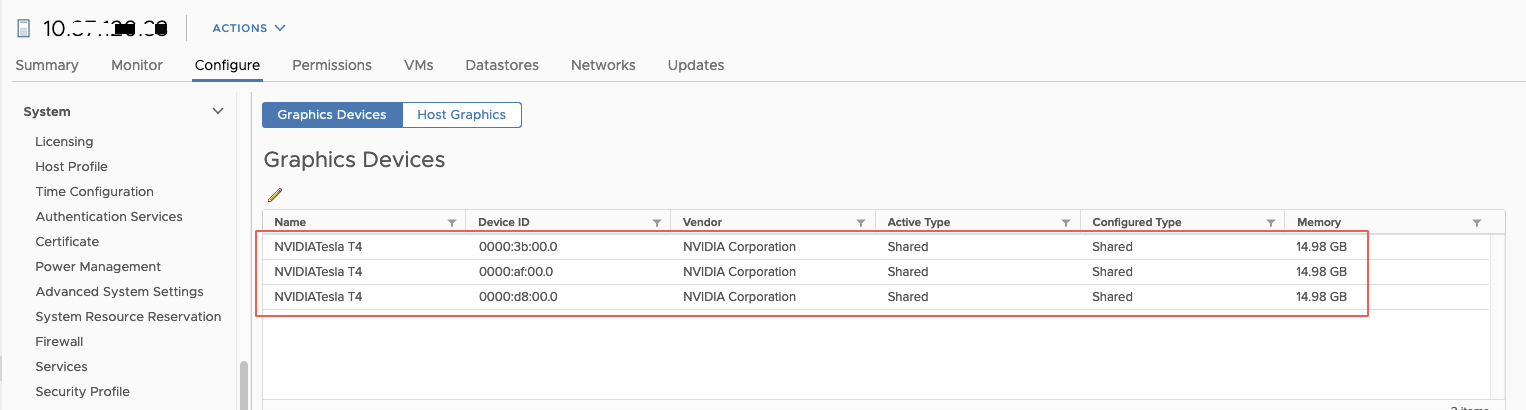

1. Configure passthrough mode for the NVIDIA GPUs

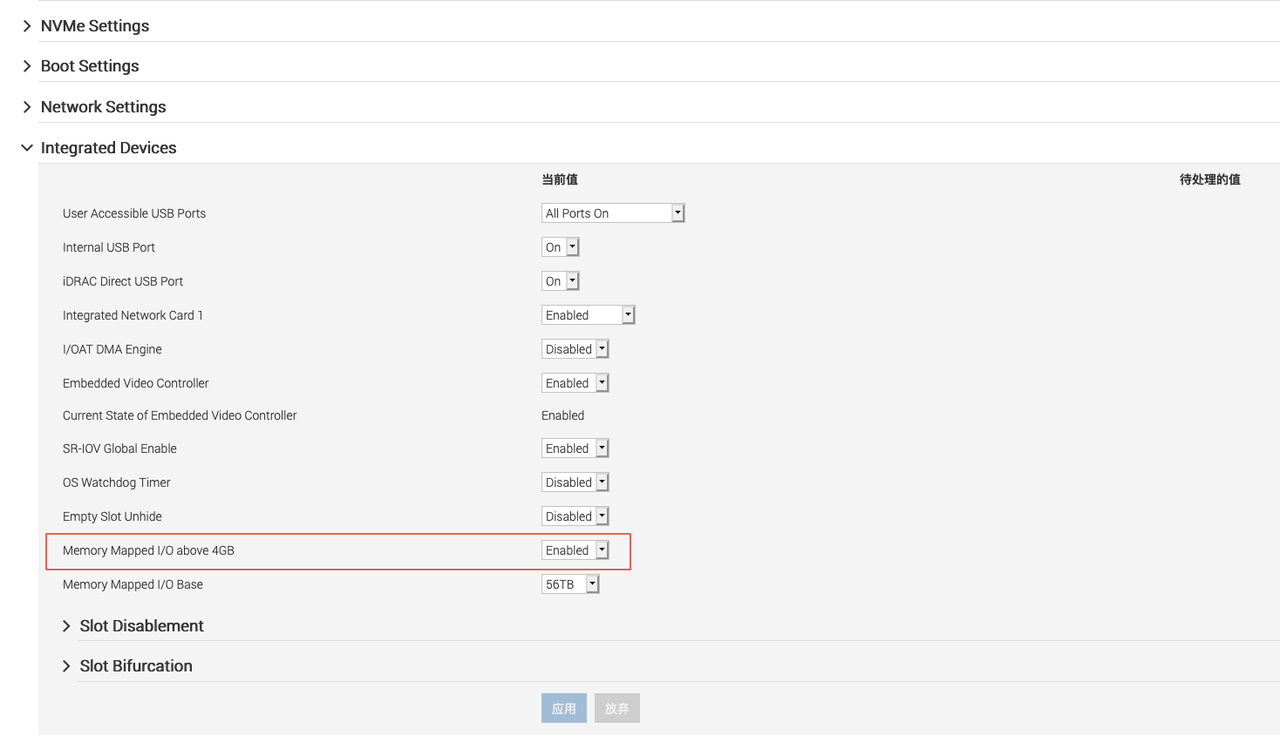

Confirm that Memory Mapped I/O above 4GB is enabled in the BIOS of the ESXi host that holds the NVIDIA GPUs.

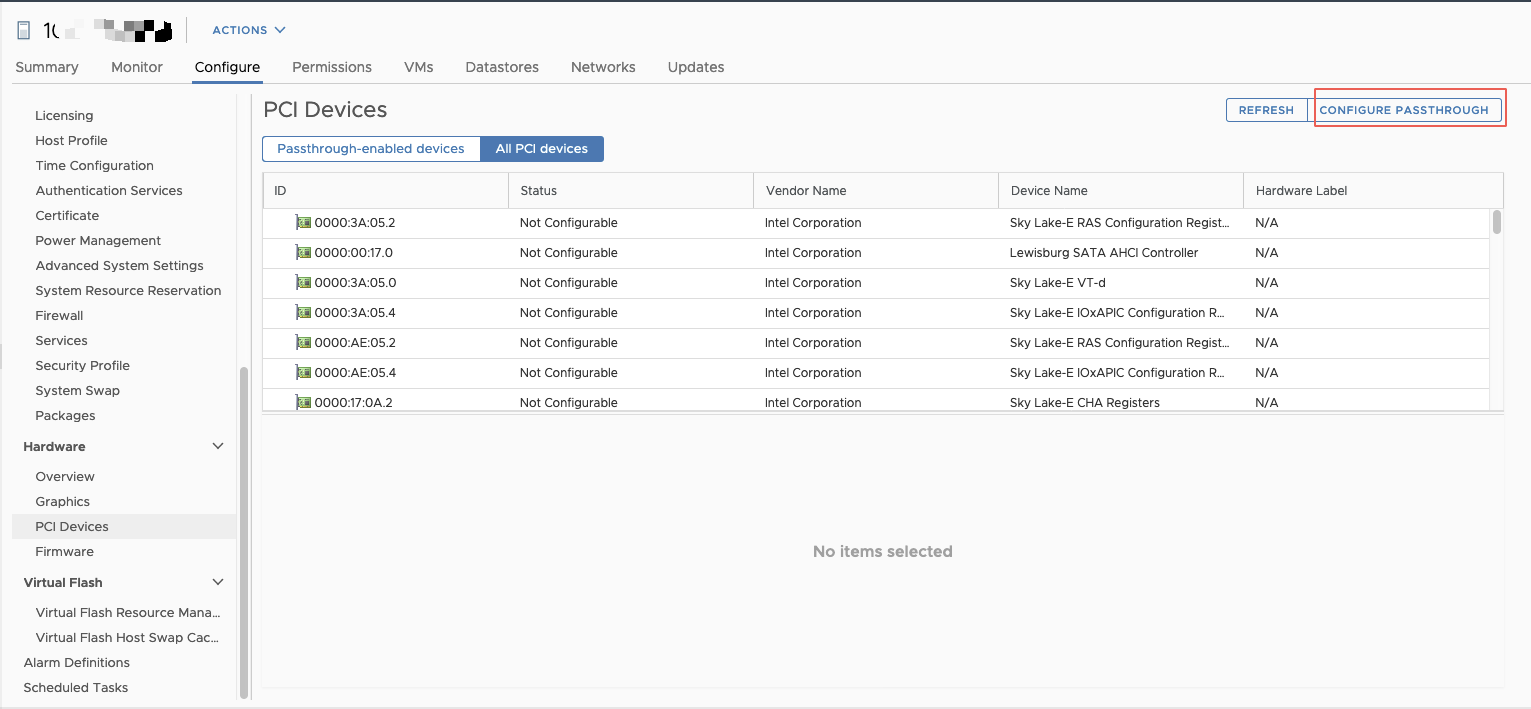

Set the GPUs on the ESXi host to passthrough mode, because they will be assigned to the Bitfusion VM.

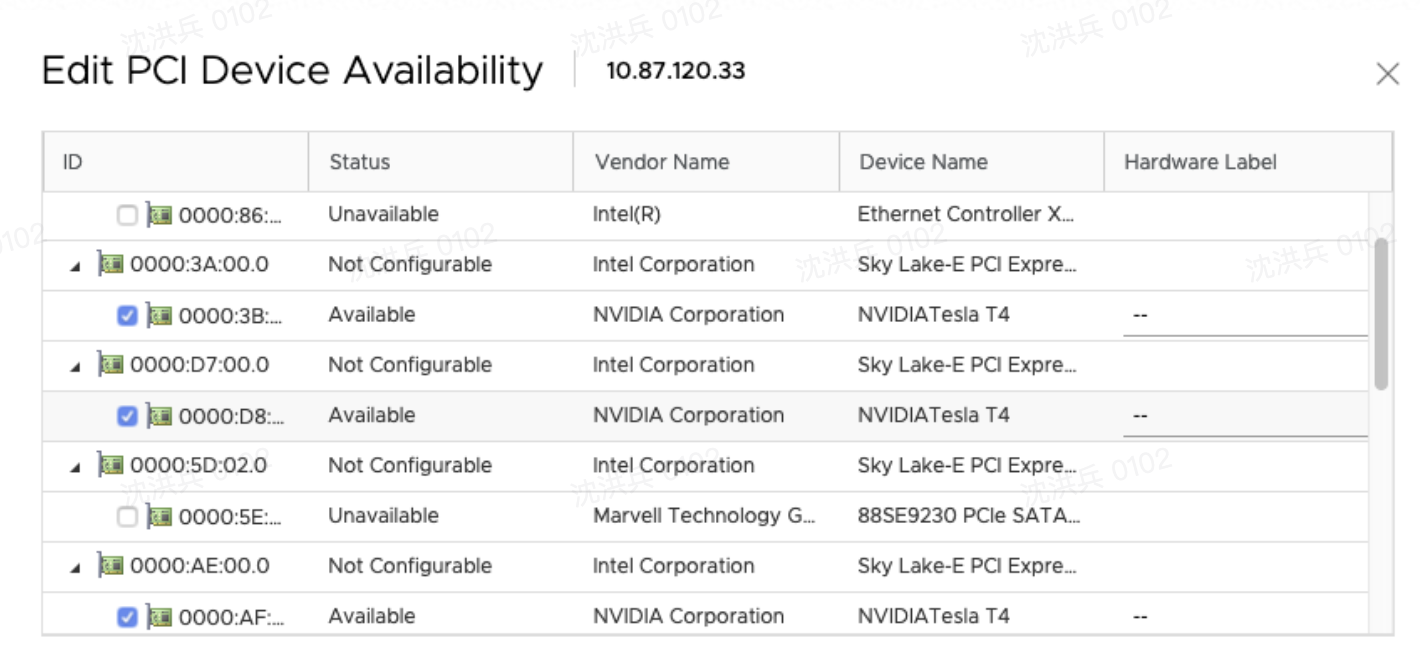

Select all NVIDIA GPUs.

Reboot the host once after this change. After the reboot, the passthrough GPUs are visible.

2. Deploy the Bitfusion Server

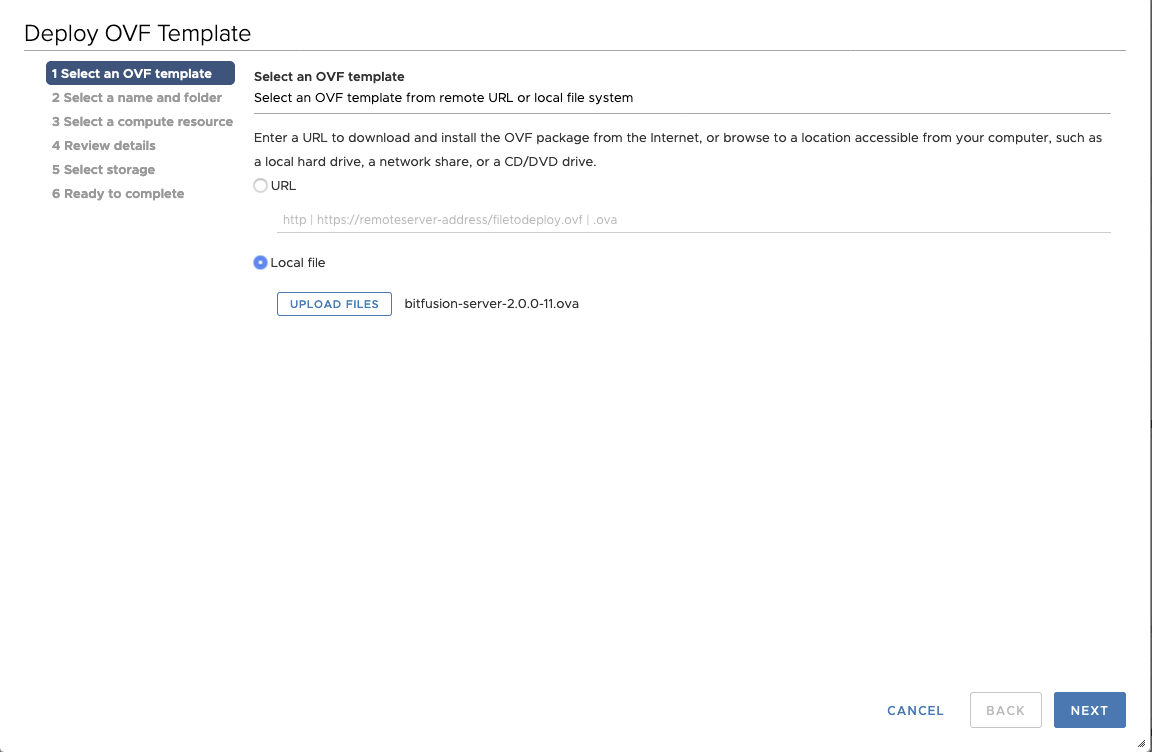

Download the Bitfusion OVA package from the official website.

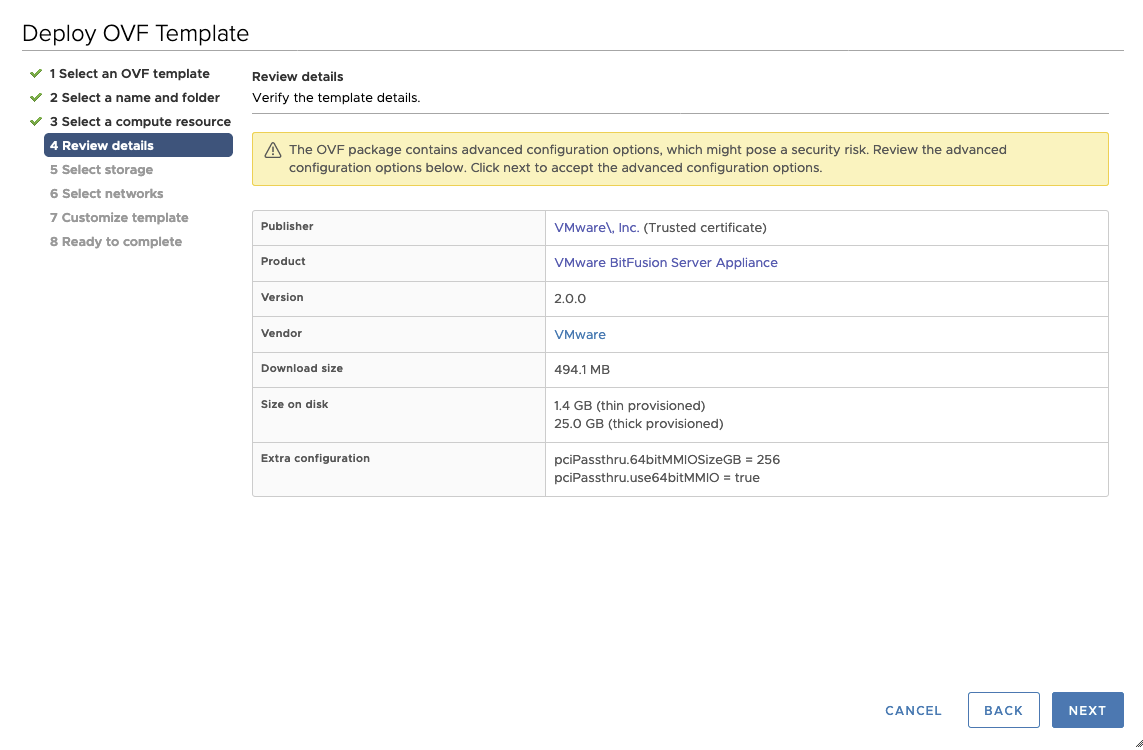

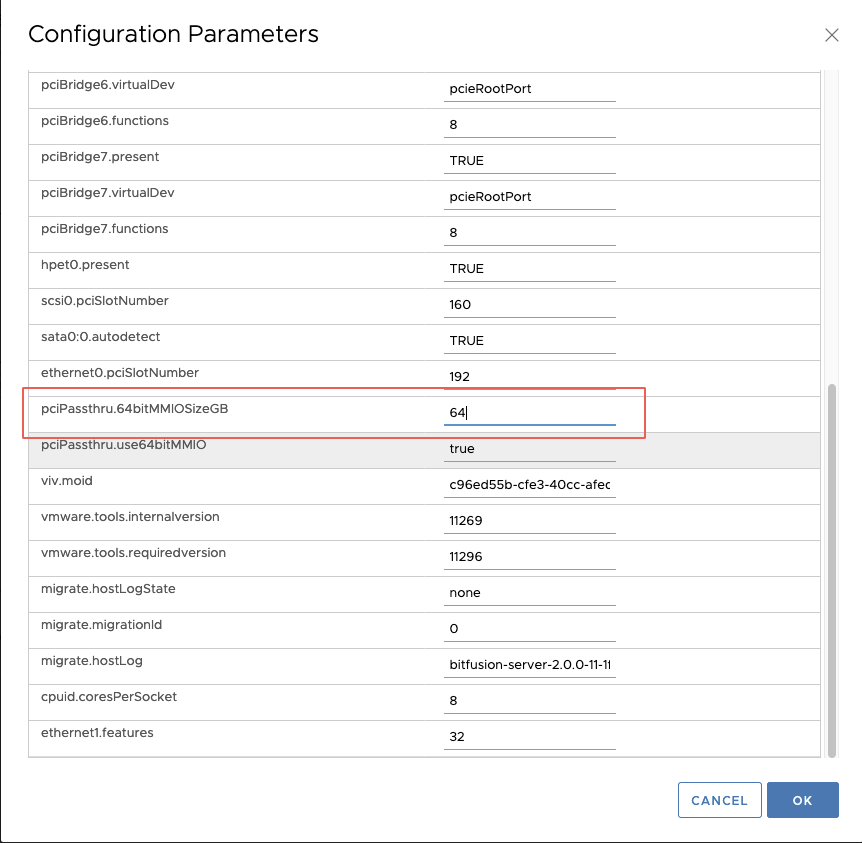

Note the setting pciPassthru.64bitMMIOSizeGB = 256 under Extra configuration. After the Bitfusion VM is deployed, this value needs to be adjusted to match the actual GPU memory.

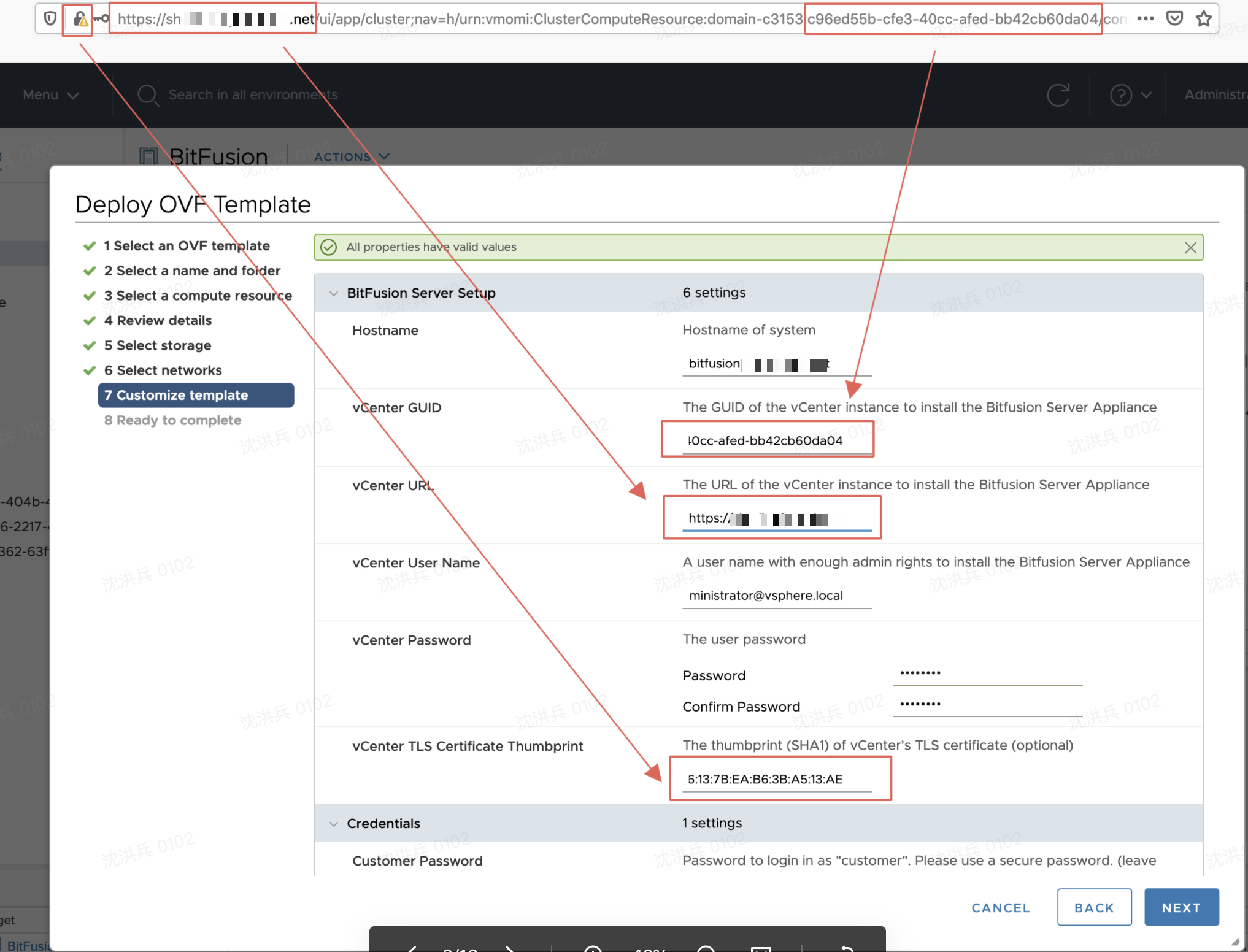

Pay attention to the vCenter GUID, vCenter URL, and vCenter TLS Certificate Thumbprint fields.

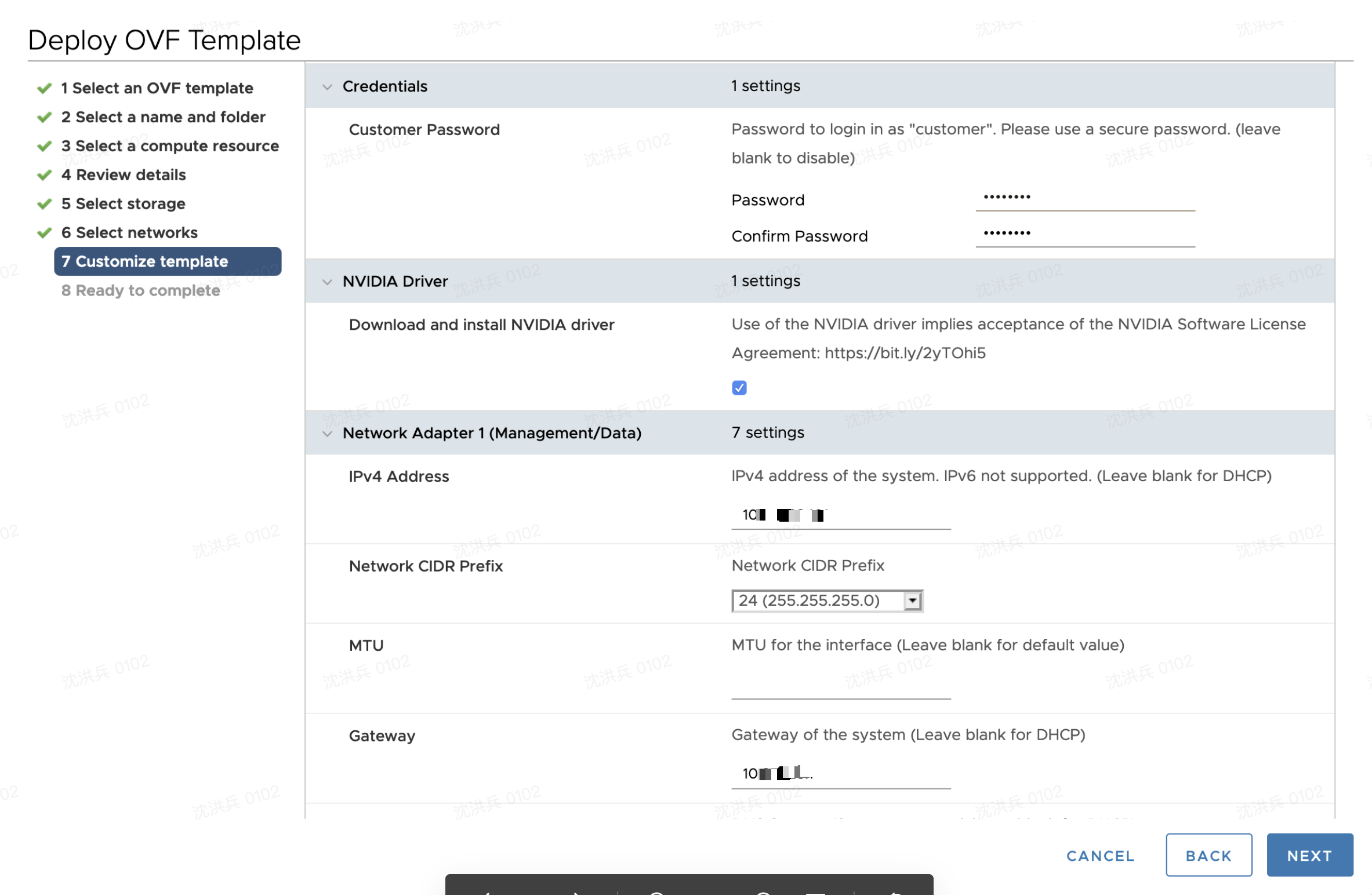

Configure the password, IP address, and related settings (an MTU of 9000 is recommended).

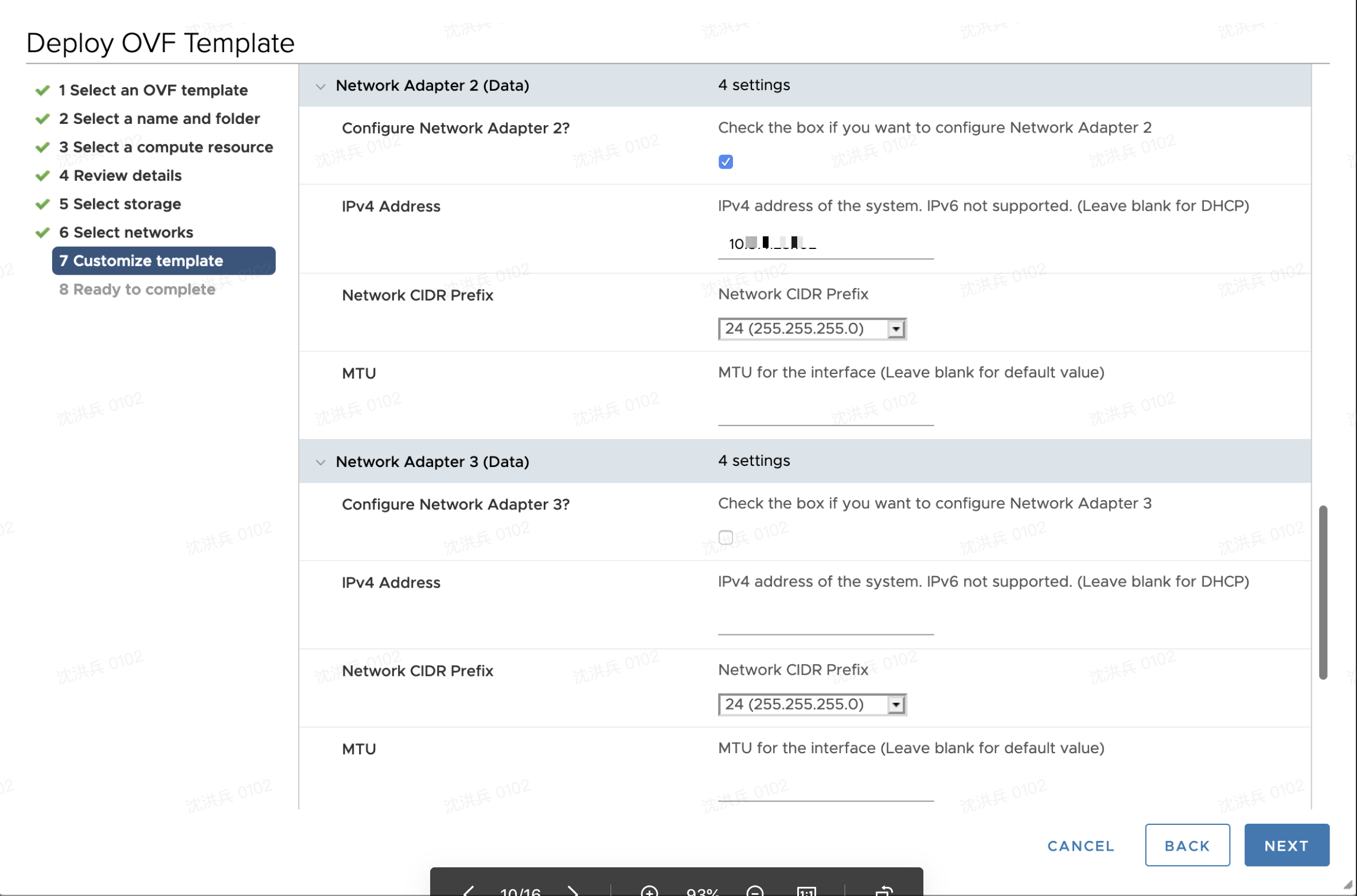

Multiple ports can be configured for data transfer (optional).

After the OVF import completes, do not power on the VM.

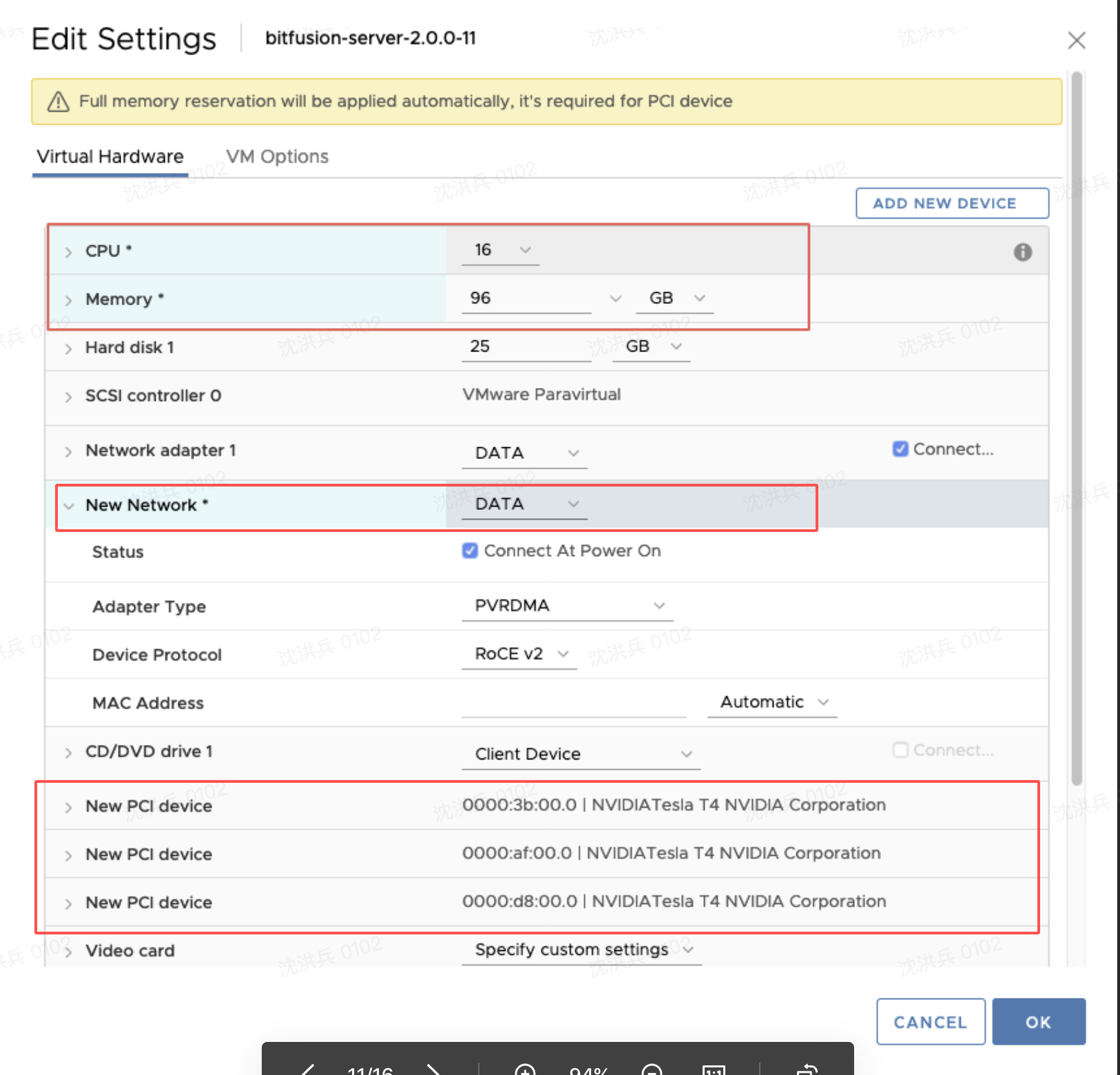

Edit the VM:

1. Adjust the CPU and memory; set the memory to 1.5 times the total GPU memory

2. Add network adapters (there is only one by default)

3. Add all passthrough GPUs

Modify the VM's advanced parameter:

pciPassthru.64bitMMIOSizeGB={n}

where n equals (num-cards * size-of-card-in-GB) rounded up to the NEXT power of 2:

example A: 2 16GB cards => 2 * 16 => 32 => rounded to next power of 2 = 64

example B: 3 16GB cards => 3 * 16 => 48 => rounded to next power of 2 = 64
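The two sizing rules above (VM memory at 1.5 times the total GPU memory, and the MMIO size rounded up to the next power of two) can be checked with a small Python sketch. The function names are hypothetical helpers for illustration, not part of any VMware tool, and the reading of "next" as "strictly greater" is an assumption taken from example A, where 32 becomes 64.

```python
def vm_memory_gb(num_cards: int, card_gb: int) -> float:
    """VM memory per the rule above: total GPU memory * 1.5."""
    return num_cards * card_gb * 1.5


def mmio_size_gb(num_cards: int, card_gb: int) -> int:
    """Total GPU memory rounded up to the NEXT power of two.

    Assumption from example A: an exact power of two (32) still moves up to 64.
    """
    total = num_cards * card_gb
    if total <= 0:
        raise ValueError("need at least one card with non-zero memory")
    # bit_length() is floor(log2(total)) + 1, so this shift gives the
    # smallest power of two strictly greater than total.
    return 1 << total.bit_length()


if __name__ == "__main__":
    for cards, size in [(2, 16), (3, 16)]:
        print(
            f"{cards} x {size}GB: VM memory = {vm_memory_gb(cards, size)} GB, "
            f"pciPassthru.64bitMMIOSizeGB = {mmio_size_gb(cards, size)}"
        )
```

Both examples from the text come out at 64, and three 16GB cards give a VM memory of 72 GB under the 1.5x rule.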

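When the configuration is done, power on the Bitfusion VM and wait about 10 minutes. Bitfusion registers its plugin automatically; refresh the browser afterwards.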

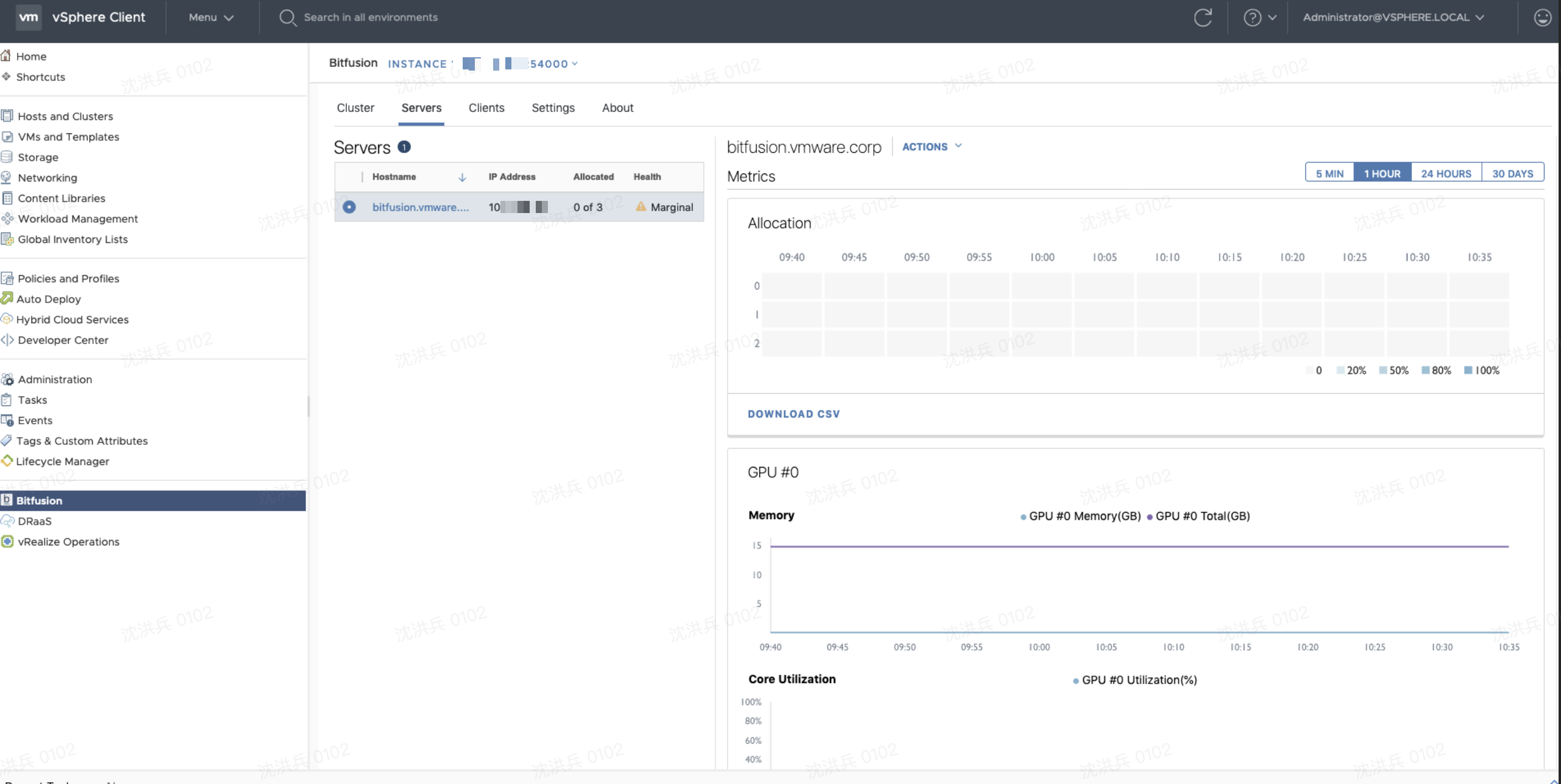

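Open the Bitfusion management UI.

Note that Bitfusion requires the ESXi hosts to be assigned a vSphere Enterprise Plus license; otherwise it reports that the license is invalid.

3. Deploy the Bitfusion Client

Note: currently only RHEL/CentOS 7 and Ubuntu 18.04/16.04 are supported.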
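A client VM can be checked against this list before installing anything. The sketch below parses the standard /etc/os-release file and compares the ID and VERSION_ID fields with the distributions named above. The helper names are hypothetical, and matching RHEL/CentOS on the major version only is an assumption (RHEL reports minor releases such as 7.9).

```python
SUPPORTED = {
    ("rhel", "7"),
    ("centos", "7"),
    ("ubuntu", "18.04"),
    ("ubuntu", "16.04"),
}


def parse_os_release(text):
    """Parse KEY=value lines of an os-release file into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip("\"'")
    return fields


def is_supported(text):
    """True if the os-release content names a distribution in SUPPORTED."""
    fields = parse_os_release(text)
    distro = fields.get("ID", "").lower()
    version = fields.get("VERSION_ID", "")
    if distro in ("rhel", "centos"):
        # Assumption: only the major version matters for RHEL/CentOS.
        version = version.split(".")[0]
    return (distro, version) in SUPPORTED


if __name__ == "__main__":
    try:
        with open("/etc/os-release") as handle:
            print("supported" if is_supported(handle.read()) else "not supported")
    except OSError:
        print("no /etc/os-release found on this machine")
```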

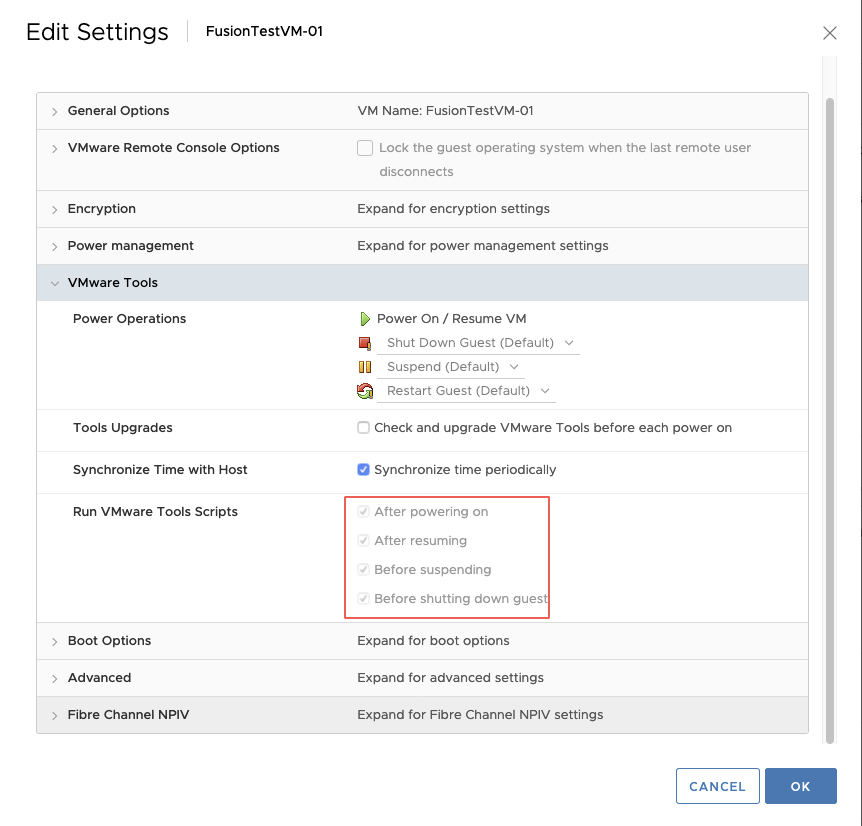

Deploy a CentOS 7 VM but do not power it on, and confirm that all Run VMware Tools Scripts options are checked.

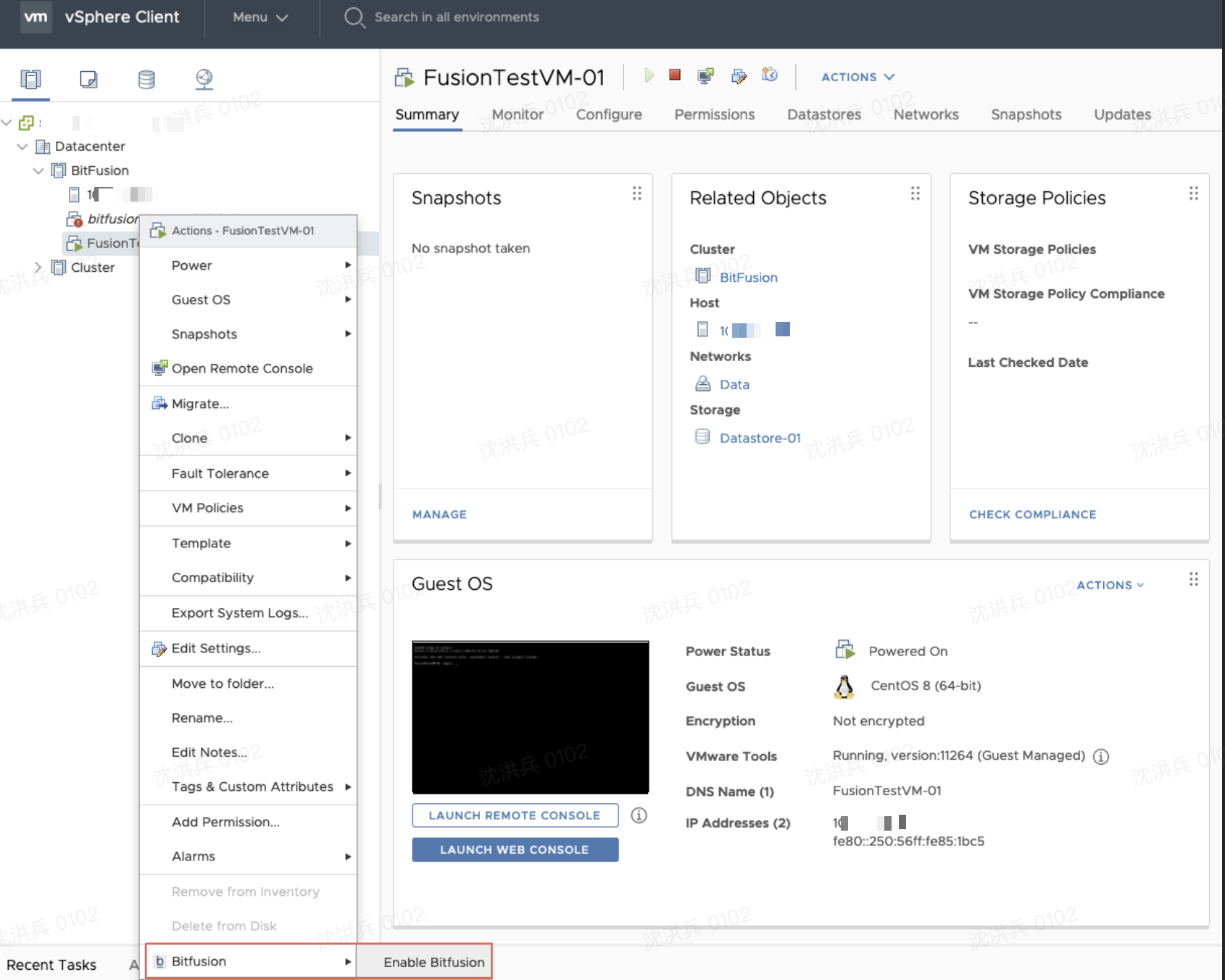

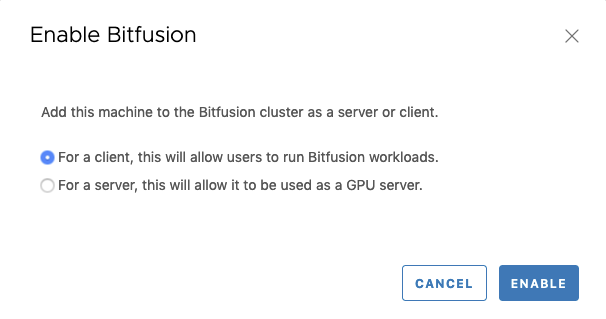

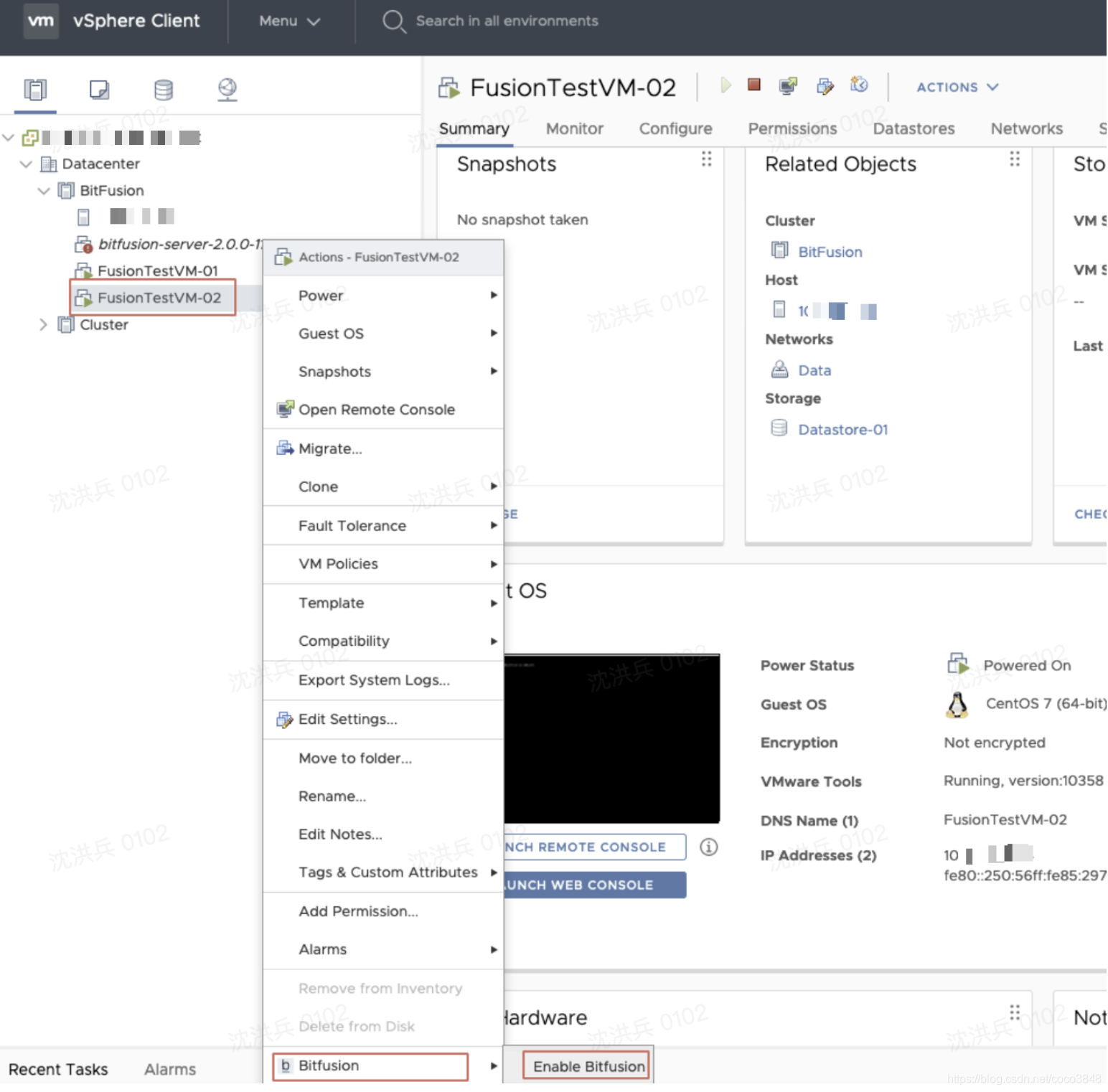

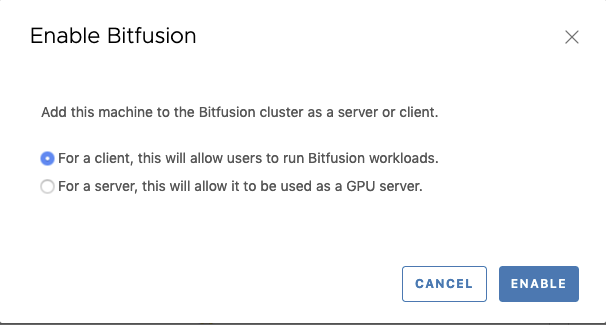

Right-click the CentOS 7 VM and enable the Bitfusion client;

select For a client.

Power on the CentOS 7 VM, then run the following commands as root to install the Bitfusion client:

    # Install bitfusion-client
    $ yum install -y epel-release
    $ rpm --import https://packages.vmware.com/bitfusion/vmware.bitfusion.key
    $ yum install -y https://packages.vmware.com/bitfusion/centos/7/bitfusion-client-centos7-2.0.0-11.x86_64.rpm

Add every account that needs Bitfusion to the bitfusion group. This walkthrough uses the root account:

    # Example: add "root" to the bitfusion group
    $ sudo usermod -aG bitfusion root

Test whether the Bitfusion client was deployed successfully:

    # Connect to Bitfusion and list all GPUs
    $ bitfusion list_gpus
     - server 0 [10.10.10.11:56001]: running 0 tasks
       |- GPU 0: free memory 15109 MiB / 15109 MiB
       |- GPU 1: free memory 15109 MiB / 15109 MiB
       |- GPU 2: free memory 15109 MiB / 15109 MiB

If the passthrough GPUs configured on the Bitfusion server are listed, the setup works.
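To turn this visual check into a scripted one, the listing can be parsed. The following is a minimal Python sketch that works on the sample output shown above; the regular expression is inferred from that sample only, so it is an assumption about the output format rather than a documented interface, and parse_list_gpus is a hypothetical helper name.

```python
import re
from typing import List, Tuple

# Sample copied from the `bitfusion list_gpus` output above.
SAMPLE = """\
 - server 0 [10.10.10.11:56001]: running 0 tasks
   |- GPU 0: free memory 15109 MiB / 15109 MiB
   |- GPU 1: free memory 15109 MiB / 15109 MiB
   |- GPU 2: free memory 15109 MiB / 15109 MiB
"""

# Matches lines such as "|- GPU 0: free memory 15109 MiB / 15109 MiB".
GPU_LINE = re.compile(
    r"GPU\s+(\d+):\s+free memory\s+(\d+)\s+MiB\s+/\s+(\d+)\s+MiB"
)


def parse_list_gpus(text: str) -> List[Tuple[int, int, int]]:
    """Return (gpu_index, free_mib, total_mib) for every GPU line found."""
    return [
        (int(m.group(1)), int(m.group(2)), int(m.group(3)))
        for m in GPU_LINE.finditer(text)
    ]


if __name__ == "__main__":
    gpus = parse_list_gpus(SAMPLE)
    print(f"{len(gpus)} GPUs, {sum(free for _, free, _ in gpus)} MiB free in total")
```

On a real client the text would come from running the command itself (for example through Python's subprocess module) instead of the embedded sample.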

Testing

1. Test procedure

Main test steps:

• Create a VM

• Enable VM for Bitfusion

• Install Bitfusion Client

• Install CUDA 10.0

• Install CuDNN 7

• Install python3, if needed (CentOS)

• Install TensorFlow 1.13.1

• Install TensorFlow benchmarks (branch cnn_tf_v1.13_compatible)

• Run TensorFlow benchmarks

2. Enable VM for Bitfusion

Create a CentOS 7 VM but do not power it on. Right-click the VM and enable Bitfusion.

Select For a client.

3. Install Bitfusion Client

Power on the CentOS 7 VM, then run the following commands:

    # Install bitfusion client
    $ yum install -y epel-release
    $ rpm --import https://packages.vmware.com/bitfusion/vmware.bitfusion.key
    $ yum install -y https://packages.vmware.com/bitfusion/centos/7/bitfusion-client-centos7-2.0.0-11.x86_64.rpm

    # Add user to bitfusion group
    $ usermod -aG bitfusion root

    # Confirm user belongs to bitfusion group
    $ groups
    root bitfusion

    # Test Bitfusion
    $ bitfusion list_gpus
     - server 0 [10.10.10.10:56001]: running 0 tasks
       |- GPU 0: free memory 15109 MiB / 15109 MiB
       |- GPU 1: free memory 15109 MiB / 15109 MiB
       |- GPU 2: free memory 15109 MiB / 15109 MiB

After installation, the client registers itself with the Bitfusion server automatically.

4. Install CUDA

CUDA is the NVIDIA library that allows programmatic access to NVIDIA GPUs. It will be used by the TensorFlow benchmarks.

    $ mkdir bitfusion
    $ cd bitfusion

    # install cuda-repo
    $ wget -P /etc/yum.repos.d/ https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/cuda-rhel7.repo
    $ yum clean all
    $ yum install -y cuda-10-0

Query the GPU device information:

    $ bitfusion run --num_gpus 1 nvidia-smi
    Requested resources:
    Server List: 10.10.10.101:56001
    Client idle timeout: 0 min
    Wed Jul 29 12:07:35 2020
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 450.51.06    Driver Version: 440.64.00    CUDA Version: 10.2     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  Tesla T4            Off  | 00000000:04:00.0 Off |                    0 |
    | N/A   28C    P8     9W /  70W |      0MiB / 15109MiB |      0%      Default |
    |                               |                      |                 ERR! |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+

5. Install CuDNN

CuDNN is the Deep Neural Network library from NVIDIA. The TensorFlow benchmarks you will run later will require this library too.

Create an account at https://developer.nvidia.com/cudnn, then download and install libcudnn7.

    $ sudo rpm -ivh libcudnn7-7.6.5.32-1.cuda10.0.x86_64.rpm
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:libcudnn7-7.6.5.32-1.cuda10.0    ################################# [100%]
    $ sudo ldconfig                 # update libraries list
    $ ldconfig -p | grep cudnn      # to see if it is installed
        libcudnn.so.7 (libc6,x86-64) => /lib64/libcudnn.so.7

6. Install Python 3 and TensorFlow

    $ yum install python3
    $ pip3 install tensorflow-gpu==1.13.1

7. Install the TensorFlow Benchmarks

The benchmarks are open source ML applications designed to test performance on the TensorFlow framework.

    $ cd ~/bitfusion
    $ git clone https://github.com/tensorflow/benchmarks.git
    $ cd benchmarks
    $ git branch -a
    * master
      remotes/origin/HEAD -> origin/master
      remotes/origin/cnn_tf_v1.10_compatible
      ...
      remotes/origin/cnn_tf_v1.13_compatible
      ...
    $ git checkout cnn_tf_v1.13_compatible
    Branch cnn_tf_v1.13_compatible set up to track remote branch cnn_tf_v1.13_compatible from origin.
    Switched to a new branch 'cnn_tf_v1.13_compatible'
    $ git branch
    * cnn_tf_v1.13_compatible
      master

8. Run the Bitfusion test

Running the TensorFlow benchmark without a GPU gives the following result: an average of 805.71 images per second.

    $ python3 ./benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py
    ...
    Running warm up
    Done warm up
    Step    Img/sec total_loss
    1       images/sec: 805.8 +/- 0.0 (jitter = 0.0)       14.299
    10      images/sec: 803.0 +/- 3.3 (jitter = 4.5)       14.299
    20      images/sec: 803.4 +/- 2.5 (jitter = 6.5)       14.299
    30      images/sec: 806.4 +/- 2.1 (jitter = 9.4)       14.299
    40      images/sec: 803.1 +/- 2.7 (jitter = 11.2)      14.298
    50      images/sec: 801.5 +/- 2.8 (jitter = 10.2)      14.298
    60      images/sec: 802.6 +/- 2.4 (jitter = 8.9)       14.299
    70      images/sec: 804.4 +/- 2.1 (jitter = 9.0)       14.299
    80      images/sec: 805.7 +/- 1.9 (jitter = 8.7)       14.298
    90      images/sec: 806.3 +/- 1.7 (jitter = 8.5)       14.298
    100     images/sec: 806.9 +/- 1.6 (jitter = 8.1)       14.298
    ----------------------------------------------------------------
    total images/sec: 805.71
    ----------------------------------------------------------------

Running the same benchmark through Bitfusion with one GPU allocated gives the following result: an average of 7013.81 images per second.

    # bitfusion allocation 1 gpu
    $ bitfusion run --num_gpus 1 -- python3 ./benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py
    ...
    Done warm up
    Step    Img/sec total_loss
    1       images/sec: 5947.0 +/- 0.0 (jitter = 0.0)        14.299
    10      images/sec: 6015.8 +/- 19.5 (jitter = 51.8)      14.299
    20      images/sec: 6091.0 +/- 20.4 (jitter = 120.5)     14.299
    30      images/sec: 6111.4 +/- 15.0 (jitter = 62.6)      14.299
    40      images/sec: 6126.5 +/- 12.5 (jitter = 57.8)      14.298
    50      images/sec: 6226.8 +/- 73.0 (jitter = 66.4)      14.298
    60      images/sec: 6497.5 +/- 115.6 (jitter = 92.3)     14.299
    70      images/sec: 6705.9 +/- 122.1 (jitter = 121.1)    14.299
    80      images/sec: 6874.3 +/- 120.4 (jitter = 242.2)    14.298
    90      images/sec: 7014.0 +/- 116.0 (jitter = 374.4)    14.298
    100     images/sec: 7133.7 +/- 111.3 (jitter = 404.1)    14.298
    ----------------------------------------------------------------
    total images/sec: 7013.81
    ----------------------------------------------------------------

9. GPU partition test

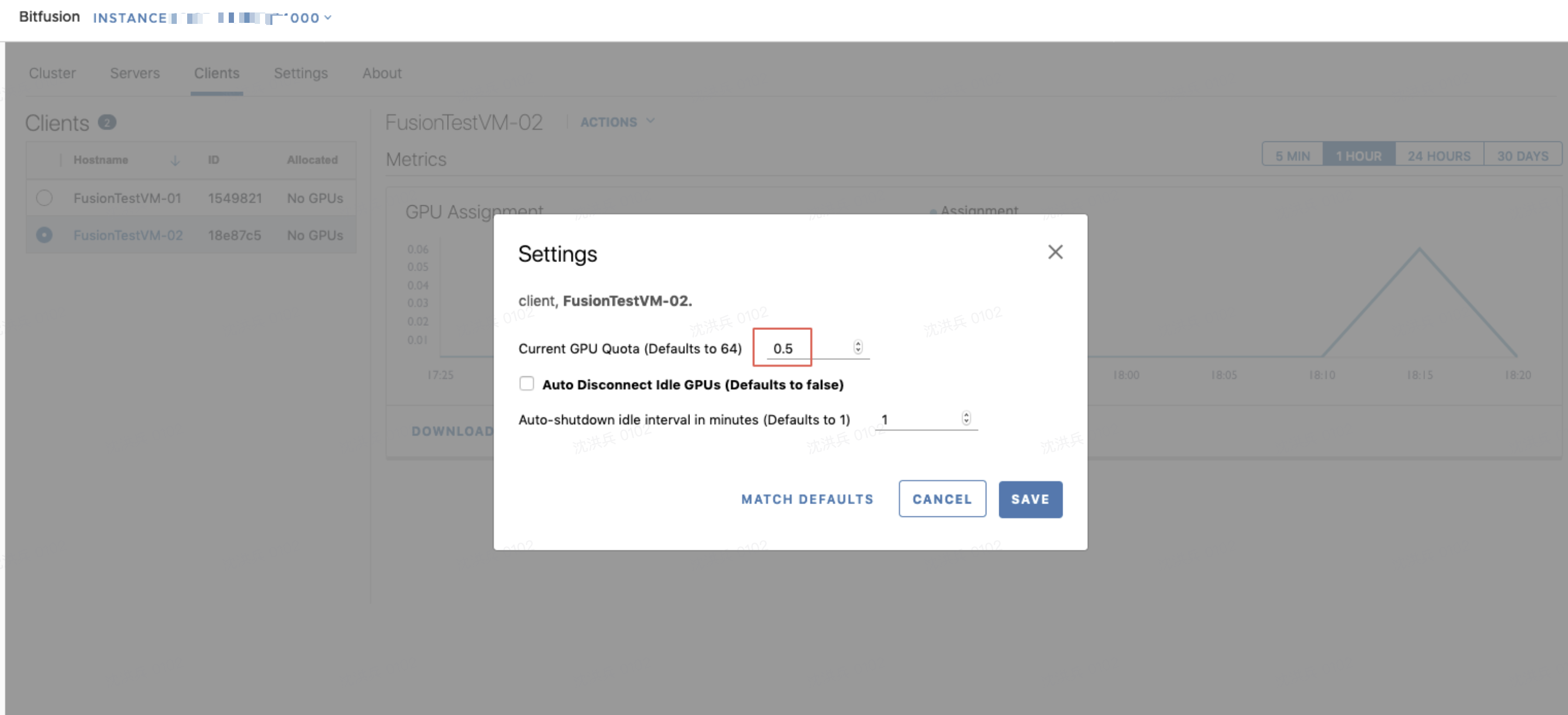

In the Bitfusion management UI, the client is shown with a quota of 0.5 GPU.

Running the test command now fails with an error:

    $ bitfusion run --num_gpus 1 -- python3 ./benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py
    Error requesting gpus: Error starting dispatcher: Error sending heartbeat: Error when sending cluster session information: ErrorOverQuota: client 18e87c5 allocation over quota: 0.50 quota, 1.00 allocated
    Error starting dispatcher: Error sending heartbeat: Error when sending cluster session information: ErrorOverQuota: client 18e87c5 allocation over quota: 0.50 quota, 1.00 allocated

After changing the request to a 0.5 partition, the run succeeds:

    $ bitfusion run --num_gpus 1 --partial 0.5 -- python3 ./benchmarks/scripts/tf_cnn_benchmarks/tf_cnn_benchmarks.py
    Requested resources:
    Server List: 10.10.10.11:56001
    Client idle timeout: 1 min
    ...
    pciBusID: 0000:00:00.0
    totalMemory: 7.38GiB freeMemory: 6.99GiB
    ...
    2020-07-29 18:25:16.604751: I tensorflow/stream_executor/dso_loader.cc:152] successfully opened CUDA library libcublas.so.10.0 locally
    Done warm up
    Step    Img/sec total_loss
    1       images/sec: 5998.6 +/- 0.0 (jitter = 0.0)        14.299
    10      images/sec: 6005.8 +/- 12.4 (jitter = 32.3)      14.299
    20      images/sec: 6009.2 +/- 7.4 (jitter = 35.3)       14.299
    30      images/sec: 6001.9 +/- 7.1 (jitter = 40.8)       14.299
    40      images/sec: 6123.6 +/- 89.9 (jitter = 50.5)      14.298
    50      images/sec: 6436.6 +/- 130.8 (jitter = 66.8)     14.298
    60      images/sec: 6667.9 +/- 132.9 (jitter = 113.8)    14.299
    70      images/sec: 6849.3 +/- 127.8 (jitter = 209.3)    14.299
    80      images/sec: 6996.1 +/- 120.6 (jitter = 486.3)    14.299
    90      images/sec: 7131.8 +/- 116.1 (jitter = 406.1)    14.298
    100     images/sec: 7289.4 +/- 117.6 (jitter = 449.9)    14.298
    ----------------------------------------------------------------
    total images/sec: 7165.57
    ----------------------------------------------------------------

10. Test summary
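The three benchmark totals reported above can be compared directly. The short Python sketch below computes the speedup of each Bitfusion run relative to the run without a GPU; the numbers are copied from the outputs in this article, and the dictionary labels are only descriptive names chosen here.

```python
# "total images/sec" values copied from the three benchmark runs above.
RESULTS = {
    "no GPU": 805.71,
    "1 GPU via Bitfusion": 7013.81,
    "0.5 GPU via Bitfusion": 7165.57,
}


def speedup(baseline: float, value: float) -> float:
    """Throughput ratio of a run relative to the baseline run."""
    return value / baseline


if __name__ == "__main__":
    base = RESULTS["no GPU"]
    for name, images_per_sec in RESULTS.items():
        print(f"{name}: {images_per_sec} images/sec, {speedup(base, images_per_sec):.1f}x")
```

Both Bitfusion runs land at roughly 8.7x to 8.9x the throughput of the run without a GPU. Each figure comes from a single run, so the small difference between the full and the half allocation should not be over-interpreted.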

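Pros

- Simple to use. A client calls Bitfusion GPU resources independently of the programming language: just prefix the program with bitfusion run --num_gpus {n} --partial {n} --.

- GPU sharing. Multiple VMs can use GPU compute over the network. A client releases its GPU resources as soon as it finishes, and other clients can then use them.

- GPU memory can be partitioned into slices of arbitrary size and assigned to different clients for simultaneous use.

- No NVIDIA license is required.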
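The prefix form from the first point can be wrapped in a small helper. This Python sketch only assembles the command line, using the two flags that appear in this article (--num_gpus and --partial); bitfusion_cmd and slice_gib are hypothetical names, and the memory estimate simply assumes that the slice is the given fraction of one GPU's memory.

```python
import shlex
from typing import List, Optional


def bitfusion_cmd(
    app: List[str], num_gpus: int = 1, partial: Optional[float] = None
) -> List[str]:
    """Build the argv that runs `app` under `bitfusion run`."""
    cmd = ["bitfusion", "run", "--num_gpus", str(num_gpus)]
    if partial is not None:
        cmd += ["--partial", str(partial)]
    # "--" separates the bitfusion options from the wrapped program.
    return cmd + ["--"] + list(app)


def slice_gib(total_mib: float, partial: float) -> float:
    """Estimated slice size in GiB (assumption: fraction of one GPU's memory)."""
    return total_mib * partial / 1024


if __name__ == "__main__":
    argv = bitfusion_cmd(["python3", "tf_cnn_benchmarks.py"], num_gpus=1, partial=0.5)
    print(" ".join(shlex.quote(arg) for arg in argv))
    print(f"expected slice: {slice_gib(15109, 0.5):.2f} GiB")
```

For the 15109 MiB cards used here, a 0.5 partition works out to about 7.38 GiB, which matches the totalMemory value TensorFlow reported in the partition test.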

Cons

- Currently only RHEL/CentOS 7 and Ubuntu 18.04/16.04 are supported